One of the more difficult challenges in robotics is the so-called “kidnapped robot problem.” Imagine you are blindfolded and taken by car to the home of one of your friends but you don’t know which one. When the blindfold is removed, your challenge is to recognize where you are. Chances are you’ll be able to determine your location, although you might have to look around a bit to get your bearings. How is it that you are able to recognize a familiar place so easily?

It’s not hard to imagine that your brain uses visual cues to recognize your surroundings. For example, you might recognize a particular painting on the wall, the sofa in front of the TV, or simply the color of the walls. What’s more, assuming you have some familiarity with the location, a few glances would generally be enough to conjure up a “mental map” of the entire house. You would then know how to get from one room to another or where the bathrooms are located.

Over the past few years, Mathieu Labbé from the University of Sherbrooke in Québec has created a remarkable set of algorithms for automated place learning and SLAM (Simultaneous Localization and Mapping) that depend on visual cues similar to what might be used by humans and other animals. He also employs a memory management scheme inspired by concepts from the field of Psychology called short term and long term memory. His project is called RTAB-Map for “Real Time Appearance Based Mapping” and the results are very impressive.

Real Time Appearance Based Mapping (RTAB-Map)

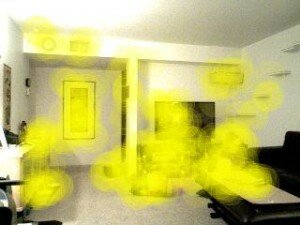

The two images below are taken from a typical mapping session using Pi Robot and RTAB-Map:

The picture on the left is the color image seen through the camera. In this case, Pi is using an Asus Xtion Pro depth camera set at a fairly low resolution of 320×240 pixels. On the right is the same image where the key visual features are highlighted with overlapping yellow discs. The visual features used by RTAB-Map can be computed using a number of popular techniques from computer vision including SIFT, SURF, BRIEF, FAST, BRISK, ORB or FREAK. Most of these algorithms look for large changes in intensity in different directions around a point in the image. Notice therefore that there are no yellow discs centered on the homogeneous parts of the image such as the walls, ceiling or floor. Instead, the discs overlap areas where there are abrupt changes in intensity such as the corners of the picture on the far wall. Corner-like features tend to be stable properties of a given location and can be easily detected even under different lighting conditions or when the robot’s view is from a different angle or distance from an object.

RTAB-Map records these collections of visual features in memory as the robot roams about the area. At the same time, a machine learning technique known as the “bag of words model” looks for patterns in the features that can then be used to classify the various images as belonging to one location or another. For example, there may be a hundred different video frames like the one shown above but from slightly different viewpoints that all contain visual features similar enough to assign to the same location. The following image shows two such frames side by side:

Here we see two different views from essentially the same location. The pink discs indicate visual features that both images have in common and, as we would expect from these two views, there are quite a few shared features. Based on the number of shared features and their geometric relations to one another, we can determine if the two views should be assigned to the same location or not. In this way, only a subset of the visual features needs to be stored in long term memory while still being able to recognize a location from many different viewpoints. As a result, RTAB-Map can map out large areas such as an entire building or an outdoor campus without requiring an excessive amount of memory storage or processing power to create or use the map.

Note that even though RTAB-Map uses visual features to recognize a location, it is not storing representations of human-defined categories such as “painting”, “TV”, “sofa”, etc. The features we are discussing here are more like the receptive field responses found in lower levels of the visual cortex in the brain. Nonetheless, when enough of these features have been recorded from a particular view in the past, they can be matched with similar features in a slightly different view as shown above.

RTAB-Map can stitch together a 3-dimensional representation of the robot’s surroundings using these collections of visual features and their geometric relations. The Youtube video below shows the resulting “mental map” of a few rooms in a house:

The next video demonstrates a live RTAB-Map session where Pi Robot has to localize himself after been set down in a random location. Prior to making the video, Pi Robot was driven around a few rooms in a house while RTAB-Map created a 3D map based on the visual features detected. Pi was then turned off (sorry dude!), moved to a random location within one of the rooms, then turned on again. Initially, Pi does not know where he is. So he drives around for a short distance gathering visual cues until, suddenly, the whole layout comes back to him and the full floor plan lights up. At that point we can set navigation goals for Pi and he autonomously makes his way from one goal to another while avoiding obstacles.

References:

- Mathieu Labbé, Student Member, IEEE, François Michaud, Member, IEEE, Appearance-Based Loop Closure Detection for Online Large-Scale and Long-Term Operation

- Mathieu Labbé and François Michaud, Online Global Loop Closure Detection for Large-Scale Multi-Session Graph-Based SLAM

By JON hauris December 3, 2015 - 8:35 pm

can you please tell me if the base of the Pi Robot is a Turtlebot? Also, does you book on ROS By Example cover applicatins to Turtlebot. If not what base are you using and what is covered in your book? Thanks, Jon

By Patrick Goebel December 4, 2015 - 7:53 am

Pi Robot currently uses a TurtleBot 2 base (a.k.a Kobuki). Most of the ROS examples covered in my two books use a simulated TurtleBot to illustrate the code. Some of the examples also use a real TurtleBot. You can see a detailed list of the topics covered in the books by clicking the table of contents links beside the book covers on this site.

By JON hauris December 3, 2015 - 8:39 pm

I am a member of your community but I cannot remember how to get to the discussion forums. Can you please remind me. Thanks, Jon

By Patrick Goebel December 4, 2015 - 7:55 am

The ROS By Example forum can be found at:

By March 27, 2016 - 12:38 pm

An impressive share, I just given this onto a colleague who was doing a little analysis on this. And he in fact bought me breakfast because I found it for him.. smile. So let me reword that: Thnx for the treat! But yeah Thnkx for spending the time to discuss this, I feel strongly about it and love reading more on this topic. If possible, as you become expertise, would you mind updating your blog with more details? It is highly helpful for me. Big thumb up for this blog post!

By November 8, 2016 - 4:37 am

Hi Patrick.

I have all your 2 books (paper + pdf for hydro and indigo !! wonderfukl)

you made me learn this fabulous world !! with my QBO robot !!

Now, did you make a tuto for RTABMAT ?

what to do with 3D map (need of visual goals points on rtab gui ?)

A howto could be a good present for Christmas (and an object reco too. lol)

Thanks again Patrick !

ps : waiting to buy Ros by examples indigo Volume 3 !!!

By Patrick Goebel November 8, 2016 - 6:52 pm

Hi Vincent. Thank you for your kind remarks. I haven’t written a tutorial for RTAB-MAP but Mathieu Labbe’s tutorials (http://wiki.ros.org/rtabmap_ros#Tutorials) are quite easy to follow.

By December 23, 2016 - 8:22 am

Hi Patrick,

Sorry for my question, but it could help some of us…

I have a RAZOR 9DOF imu, mounted on usb on my robot (a QBO),

Trying to use it with my encoders and robot pose ekf, for better estimation moves.

imu working, publishing to imu_state/data GOOD

But i don’t have success with urdf or xacro :

my imu always appears with a 45° in Z axis (fixed in right position on QBO) on RVIZ

So the mapping does rounded wrong walls…..bad mapping.

I think that I should link all the robot to the IMU, to make it parent. (but what to do with base_link/footprint ?)

I saw that you tried this imu, so, do you have examples of integration in bringup or description ?

Thanks Patrick, and merry Christmas.

PS : always waiting for your next book…vol3 lol

By Patrick Goebel January 5, 2017 - 7:49 am

Hi Vincent. I haven’t used that IMU in quite a few years. You would probably get good answers to your question on http://answers.ros.org.